I've been using and experimenting with Nexus Quality Suite on and off for the past 9 months, and I've been meaning to write up a blog post about it. The trouble with reviewing this software suite is that it contains so much that I can only skim the surface. So I think I'll present it as a series of small, task-oriented mini reviews. Initially I was running the tools in this suite on an extremely large Delphi system. While the suite is definitely useful for very large systems, I found it difficult to explain that usefulness using that large application.

So I've decided to keep my real world focus in reviewing this tool, but I'm picking a bit of my own personal code to profile and test. I'm going to run Nexus Quality Suite's tools against a little application I first wrote in about 1996, that is in my toolkit of "system admin and developer-operations" tools. Here's what it looks like:

It can ping any number of hosts, from one to hundreds. When any of those hosts goes offline (does not respond to ICMP ping), or the DNS resolver stops resolving, this little tool can beep (for in-office monitoring) or send an email (which can alert me even when I'm out of the office). But this tool has always been slow, slow, slow. Since I add a configurable sleep time between its runs, I've never worried about its performance, but I recently had a use for this tool again, so I dusted off the source code, added a few little things, and recompiled it in Delphi 10.1 Berlin. I even found a missed "Unicode port" bug where I had forced a cast to AnsiString over a UnicodeString in a way that actually resulted in sending Unicode bytes into an ANSI Windows API. Bad Warren! No cookie for you! My only excuse is that I wrote the code in question in 1996, in Delphi 2, and simply overlooked it when porting this code to Unicode Delphi.

Anyway, back to the performance profiling tools. The latest version of Nexus Quality Suite, 1.60, supports both 32-bit and 64-bit programs, but I would recommend profiling 32-bit builds of your tools where you can, as they are probably easier to profile. For those cases where you really want to profile 64-bit code, now you can. The NQS installer installs a group of items in your Tools menu. Be aware that certain Delphi versions have a bug, which has a workaround available, and that the installer for Nexus Quality Suite actually warns you about it. This is good customer service right here. Good job, Nexus, and thanks Andreas Hausladen.

Here's the installer warning. I have XE4, XE8, and 10.1 Berlin on my computer right now, and this is what I saw:

After installation, here are the menu items. There are too many tools in here to cover them all in one review, but I'm going to quickly show one application run through two of the tools.

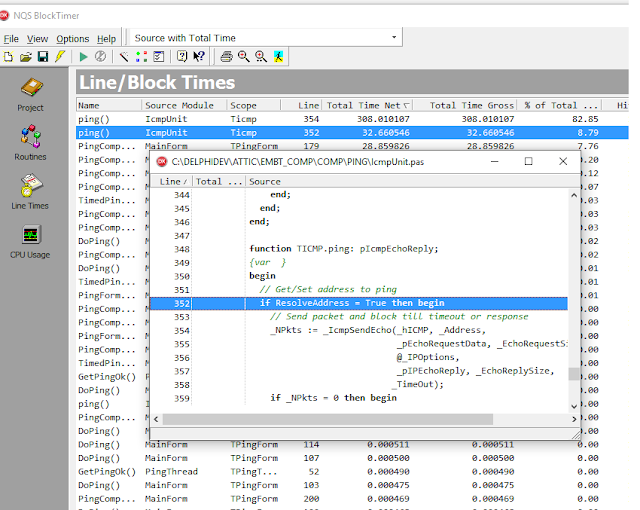

The first tool in this review is brand new, I think. The Block Timer application is a new profiler tool based on the other profiler tools, but with some new capabilities. I asked support and was told that more documentation is coming soon. The Block Timer joins its partner the classic Method Timer in providing some pretty great time-based profiling capabilities for your Delphi applications. Here is a summary of the features of the new block timer compared to the existing method timer and line timer profilers:

1. The block timer is thread aware, and can break down information into thread by thread values, whereas all times are combined for all threads in the other profilers.

2. The block timer can accurately report information about time spent in recursive methods.

3. All that extra bookkeeping makes the overhead of running this profiler a bit higher.

4. No dynamic profiling in this one. You lose the trigger feature from the Line Timer (LT) profiler, which is an important feature. It's worth switching to LT when you need triggers.

So far it seems to me that in smaller applications, with fewer procedures selected for profiling, the most intensive technique, the Block Timer (BT), is worth its overhead and produces the most interesting results. The larger the application, and the larger the cross section of the application's methods I want to test, the more useful the classic lower-overhead Method Timer (MT) and LT profilers are.

Configuring your application to work with this or other profiler tools is pretty consistent: the same steps are necessary for this tool and for any other sampling profiler or runtime analyzer. Turn on TD32 debug symbols in Project Options (on the Linker tab in older versions, or under Debug Information in the newer ones, according to the docs).

Run the tool from the Tools menu. Note that on Delphi XE through XE6 it's a good idea to do a full rebuild before you click the Tools menu item, as Delphi doesn't rebuild the target for you on those versions.

You click one tool, and the first time you do, you will probably want to do a bit of configuration. Each tool requires slightly different configuration. It is NOT a good idea, in my opinion, to profile ALL of any non-trivial application. First, because you're asking a lot of the NQS tool. Second, because even if the tool can successfully gather information on 10 or 20 thousand methods, you probably can't do much with the results. I recommend doing a little searching and probing to find some routines that matter, and including those. The user interface is reminiscent of Outlook 2000 for most of the tools. In the case of the Block Timer and Method Timer, you use the Routines icon, which for the last few releases has included a nice Search feature. I think I requested that, and I'm gratified to see it in there. Because my app is all about the Ping, I'm looking for the Ping methods; I want to know what they're up to...

After searching, then selecting the routines, I right click and "Enable Tracking for Selected" methods. Then I click the green triangle "play" icon to make my application-under-test start execution. In a small application you could perhaps select everything. But as I have learned from much experimentation, it's really better to spend a bit of time searching for methods you suspect to be relevant and enable a dozen or two dozen of those. Then drill in, and enable further layers of the code, as necessary, to get a clear picture of your system behavior.

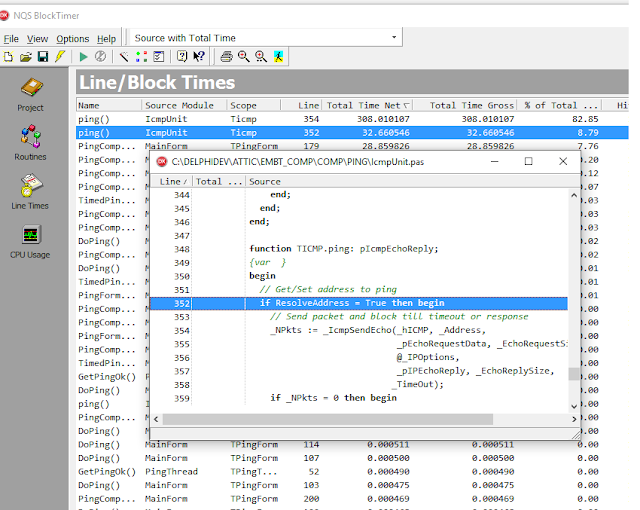

After my program has executed long enough to get a reasonable sample, in my case, just over 5 minutes, I shut it down, and then the timing analysis results are shown:

You can also see a bit of a trend of CPU usage by your program, in total, which can be really interesting, because you might want to know "what is the program doing during these bursts of CPU activity?".

A nice feature built in is that if you have configured your source search path in the NQS project options, you can just double click on a line of interest and see the code:

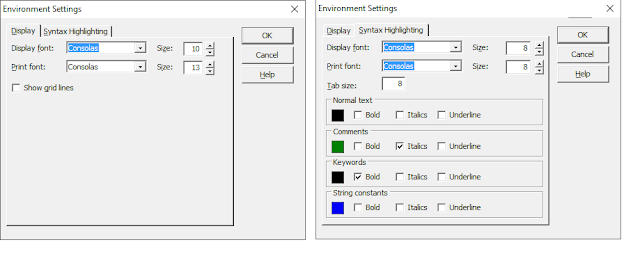

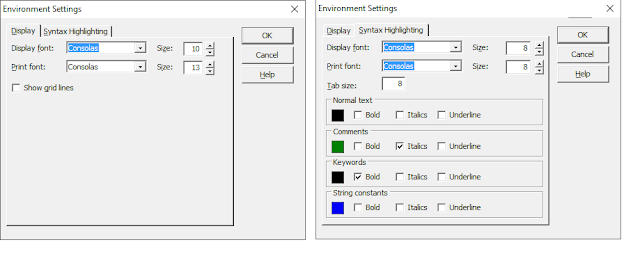

If the NQS tools don't show things in the font you wish, you can change it. The fonts are individually selectable, and I change ALL of them to Consolas because it's the one true Code Editor font. If you like the Raize font and you have that one around, you could pick that one. Courier New is more to some other people's taste. If you happen to want Comic Sans, well, you're drunk, go home.

So now I want to jump from Tool to Insight. What makes tools like this great is the moment when the insight clicks in your head. Today I just saw this line and I realized that ResolveAddress is a function. Because parentheses are not mandatory in a Pascal method invocation, the code here looks like it's just a variable or property check, but it's actually a very expensive call. Do I really need to repeat the Resolve on each ping, or could my tool just periodically check that the DNS resolution is still working properly, cache the resolved value, and do multiple ICMP pings to the IP address? In my case, I think I'm wasting a lot of cycles, loading down my company's or customer site's DNS service unnecessarily, and generating a bit of wasteful network traffic. In my next version, beyond making my tool, say, 10% more CPU efficient and 10% more network efficient, I might also make it a bit more configurable, for example by letting the user configure how often to check that DNS resolution for an important host is still working.

I also think I should rewrite the code above so that it's clear that it is not just a value check but an actual function invocation. I really think I need to rewrite lots of internals in TICMP.
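Here is a minimal sketch of the caching idea, not the tool's actual code. `TCachedResolver`, `TResolveFunc`, and the 60-second refresh interval are hypothetical names and values I made up for illustration, and the resolver is a stub that returns a fixed documentation-range address instead of doing a real DNS lookup. The call site also uses explicit parentheses so that the invocation is visible.

```pascal
program CachedResolveSketch;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.DateUtils;

type
  // Hypothetical stand-in for whatever really performs the DNS lookup.
  TResolveFunc = reference to function(const HostName: string): string;

  // Hypothetical helper: remembers the last resolved address and only
  // calls the resolver again once the cached value is older than the
  // configured refresh interval.
  TCachedResolver = class
  private
    FResolve: TResolveFunc;
    FHostName: string;
    FCachedAddress: string;
    FLastResolved: TDateTime;
    FHasValue: Boolean;
    FRefreshSeconds: Integer;
  public
    constructor Create(const AHostName: string; ARefreshSeconds: Integer;
      const AResolve: TResolveFunc);
    function GetAddress: string;
  end;

constructor TCachedResolver.Create(const AHostName: string;
  ARefreshSeconds: Integer; const AResolve: TResolveFunc);
begin
  inherited Create;
  FHostName := AHostName;
  FRefreshSeconds := ARefreshSeconds;
  FResolve := AResolve;
end;

function TCachedResolver.GetAddress: string;
begin
  if (not FHasValue) or
     (SecondsBetween(Now, FLastResolved) >= FRefreshSeconds) then
  begin
    FCachedAddress := FResolve(FHostName); // the expensive call
    FLastResolved := Now;
    FHasValue := True;
  end;
  Result := FCachedAddress;
end;

var
  Resolver: TCachedResolver;
  Address: string;
  LookupCount, I: Integer;
begin
  LookupCount := 0;
  Resolver := TCachedResolver.Create('example.com', 60,
    function(const HostName: string): string
    begin
      Inc(LookupCount);      // count how often we "hit DNS"
      Result := '192.0.2.1'; // fake address, no network involved
    end);
  try
    for I := 1 to 10 do
      Address := Resolver.GetAddress(); // parentheses: clearly a call
    Writeln('Last address: ', Address);
    Writeln('Lookups performed: ', LookupCount);
  finally
    Resolver.Free;
  end;
end.
```

As long as the ten simulated pings finish inside the refresh interval, the stub resolver should run only once. A real version would also need to decide what to do when a lookup fails, which this sketch ignores.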

But what else could be wrong with my code, other than that it's wasteful? How about memory leaks? So I am now going to switch to Code Watch. It took only a few minutes of trying it out to find that although my background worker thread terminates, it is never freed, and I have a memory leak. This tool finds the problem and reports the source line. It also found some API failures that I may or may not have been aware of, and a Win32 resource (a thread handle) that was leaked. This is awesome.
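For readers who haven't hit this kind of leak, here is a minimal sketch of one way to make sure a terminated worker thread is also freed. `TPingWorker` is a hypothetical stand-in, not the class from my tool, and the sleeps are only there to simulate work.

```pascal
program ThreadCleanupSketch;

{$APPTYPE CONSOLE}

uses
  System.Classes, System.SysUtils;

type
  // Hypothetical worker standing in for the background ping thread.
  TPingWorker = class(TThread)
  protected
    procedure Execute; override;
  end;

procedure TPingWorker.Execute;
begin
  while not Terminated do
    Sleep(10); // real code would ping hosts here
end;

var
  Worker: TPingWorker;
begin
  Worker := TPingWorker.Create(False); // not suspended: starts right away
  try
    Sleep(100);        // let the worker run for a moment
    Worker.Terminate;  // ask Execute to return
    Worker.WaitFor;    // block until it actually has
  finally
    Worker.Free;       // release the object and its thread handle
  end;
  Writeln('Worker stopped and freed');
end.
```

The other common option is to set `FreeOnTerminate := True` on the thread, in which case the thread frees itself and the owning code must not touch the reference once the thread is running.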

I'm going to wrap up now. I hope that all the above impresses you, because it sure impresses me.

Before I wrap up, I'll briefly compare this option to your only other real option for this kind of tool. SmartBear's AQTime suite can do many of the same things that Nexus Quality Suite can do, but Nexus Quality Suite can actually do lots of things that the AQTime suite can't. AQTime is more expensive, at $599 with a very restrictive named-single-user license, and it has a nasty activation and intrusive anti-piracy copy protection system that I do not much like, because it won't let me run with a single user license inside a VM. The copy protection actually runs a background Windows service, which detects all kinds of things like virtual machine use, and it disallows program operation inside a VM. And the IDE integration of AQTime just crashed on me the last couple of times I used it. I reported these crashes, and over several releases, the crashes never got fixed. Sayonara, AQTime.

So what's the price for NQS? At the promotional sale price of $226 USD ($300 AUD), and with no intrusive copy protection that treats me as a thief, I have no problem recommending that EVERY Delphi developer and Delphi-using company buy this suite. There are lots of tools, and they work really well. If I had to complain about something, it's that the documentation needs some further work, but they are working on that. The product works, and when I find a problem or have a question, the technical support team is great. The price is going up soon, so I recommend grabbing this while it's on sale.

I am planning to write some further review articles to cover this suite. In particular, I believe the automated GUI testing features in NQS deserve their own separate review. I also think there are many more profiling techniques that can tease out very complex runtime problems in your system, not JUST to get the data to help make your program faster or stop it leaking memory, but also to understand complex behaviors by gathering runtime data that lets you see your program running.

In the past year, the amount of new stuff that has been delivered in NQS is truly astounding. 64-bit support is new. I think this whole extra set of profiling tools is new. I tested NQS on an extremely large application where I work. The product is over 5 million lines of Delphi code, including all the in-house and third party component libraries, all the main forms and data modules, and other code. In an earlier version of the tool, I was able to find a crash inside one of the NQS tools. I sent information to reproduce it to Nexus, and in the next release the product was fixed. That's good customer service.

NQS is a tool that deserves a spot in your toolbelt too.

Full Disclosure: I received a complimentary review copy of this product, but the opinion above is 100% my own. I don't write good reviews for every product I receive a license for. Quite the opposite: if I see something I dislike or can't use, I'll say so. I'm a working coder, and I have no time for weak tools. I have recommended that my boss buy multiple copies of this tool suite at work, where I believe it would be extremely useful.